Data scientists want predictive models that capture the real relationships in data, so that predictions hold up on cases the model has never seen. A common obstacle is overfitting: the model fits the training data so closely, noise included, that it generalizes poorly to unseen data. Lasso Regression addresses this by trading a small amount of training accuracy for a simpler model.

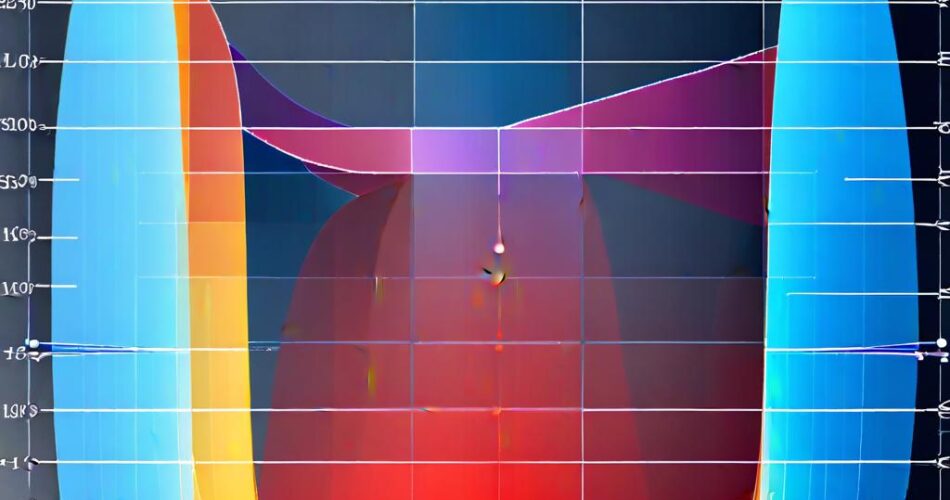

Lasso, short for “Least Absolute Shrinkage and Selection Operator,” is a form of linear regression that also performs feature selection. It does so through “L1 regularization,” which adds a penalty proportional to the sum of the absolute values of the regression coefficients. The penalty shrinks all coefficients toward zero and sets the coefficients of the least useful features to exactly zero, which removes those features from the model and leaves a more parsimonious one. When several features are strongly correlated, Lasso tends to keep one of them and drop the others.
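
One common way to write the Lasso objective is shown below. The symbols are assumptions introduced here for illustration: $n$ samples, $p$ features, feature matrix $X$, target vector $y$, coefficient vector $w$, and penalty strength $\alpha$. The $\frac{1}{2n}$ scaling follows the convention used by scikit-learn's `Lasso`; other texts scale the first term differently.

$$
\min_{w} \; \frac{1}{2n} \lVert y - Xw \rVert_2^2 + \alpha \lVert w \rVert_1,
\qquad \lVert w \rVert_1 = \sum_{j=1}^{p} \lvert w_j \rvert
$$

The first term is the usual least-squares fit. The second term grows with the absolute size of every coefficient, so a feature keeps a nonzero weight only if it reduces the squared error enough to pay for it.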

Benefits of Lasso Regression:

* Reduced Overfitting: By shrinking coefficients and dropping weak features, Lasso Regression limits model complexity. This usually improves generalization to new data, provided the penalty strength is chosen well.

* Improved Interpretability: A model with fewer features is inherently easier to understand. Lasso Regression helps identify the most influential variables, providing valuable insights into the underlying relationships within the data. This knowledge can be crucial for decision-making and understanding the driving forces behind the model’s predictions.

* Feature Selection: Selection happens as part of fitting, because any feature whose coefficient ends up at zero is out of the model. This avoids a separate manual selection step, which can be tedious and prone to bias.

* Handling High-Dimensional Data: Lasso Regression remains usable on datasets with many features, including cases where features outnumber samples. Because many coefficients are set to zero, the fitted model depends on only a subset of the features, which keeps it simpler and often improves predictive performance.
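
The feature-selection behavior described in the list above can be seen in a small sketch. It is not a production solver: it assumes centered data with no intercept, uses coordinate descent (one common way to fit Lasso) on synthetic data, and the names `soft_threshold` and `lasso_coordinate_descent` are hypothetical helpers written for this example. The objective is assumed to be the squared error divided by `2n` plus `alpha` times the sum of absolute coefficients.

```python
import random


def soft_threshold(rho, alpha):
    """Shrink rho toward zero by alpha; anything in [-alpha, alpha] becomes exactly 0."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0


def lasso_coordinate_descent(X, y, alpha, n_iters=200):
    """Minimize (1/(2n)) * ||y - Xw||^2 + alpha * ||w||_1, without an intercept."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    residual = list(y)  # equals y - Xw while w is all zeros
    for _ in range(n_iters):
        for j in range(p):
            col = [X[i][j] for i in range(n)]
            z = sum(v * v for v in col) / n
            if z == 0.0:
                continue  # constant-zero column carries no information
            # Correlation of feature j with the residual that ignores feature j itself.
            rho = sum(col[i] * (residual[i] + col[i] * w[j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, alpha) / z
            delta = new_wj - w[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= col[i] * delta
                w[j] = new_wj
    return w


# Synthetic data (an assumption for illustration): only features 0 and 2 matter.
random.seed(0)
n = 200
X = [[random.gauss(0, 1) for _ in range(5)] for _ in range(n)]
true_w = [3.0, 0.0, -2.0, 0.0, 0.0]
y = [sum(x * t for x, t in zip(row, true_w)) + random.gauss(0, 0.5) for row in X]

w = lasso_coordinate_descent(X, y, alpha=0.3)
selected = [j for j, wj in enumerate(w) if wj != 0.0]
print("coefficients:", [round(wj, 3) for wj in w])
print("selected features:", selected)
```

The irrelevant features end up with coefficients of exactly zero, while the two informative ones survive with magnitudes somewhat smaller than their true values. That shrinkage is the price paid for the simpler model.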

Practical Applications:

Lasso Regression finds widespread applications across various domains:

* Finance: Predicting stock prices, identifying risk factors, and optimizing portfolio allocation.

* Healthcare: Diagnosing diseases, predicting patient outcomes, and personalizing treatment plans.

* Marketing: Targeting customers, optimizing advertising campaigns, and predicting customer churn.

* Engineering: Predicting product performance, optimizing manufacturing processes, and identifying potential failures.

Balancing Act:

Lasso Regression offers significant advantages, but it is not a silver bullet. The regularization parameter, known as “alpha,” controls the strength of the penalty, and the balance between accuracy and simplicity depends on tuning it carefully, typically by cross-validation. An alpha that is too high leads to underfitting: too many coefficients are pushed to zero and the model misses important relationships. An alpha that is too low leaves the model close to ordinary linear regression, so it can still overfit.
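
The effect of alpha can be illustrated with a short sketch. It relies on a simplifying assumption: when features are standardized and uncorrelated, and the objective is scaled as squared error over `2n` plus `alpha` times the L1 norm, each Lasso coefficient is the least-squares coefficient soft-thresholded by alpha. The coefficient values below are made up for illustration, and `soft_threshold` is a hypothetical helper.

```python
def soft_threshold(value, alpha):
    """Move value toward zero by alpha, stopping at exactly zero."""
    if value > alpha:
        return value - alpha
    if value < -alpha:
        return value + alpha
    return 0.0


# Hypothetical least-squares coefficients for five standardized, uncorrelated features.
ols_coefs = [2.5, -1.2, 0.4, -0.15, 0.05]

counts = {}
for alpha in [0.0, 0.1, 0.5, 1.0, 3.0]:
    lasso_coefs = [soft_threshold(c, alpha) for c in ols_coefs]
    counts[alpha] = sum(1 for c in lasso_coefs if c != 0.0)
    print(f"alpha={alpha:<4} kept={counts[alpha]} coefs={[round(c, 2) for c in lasso_coefs]}")
```

With alpha at 0 all five features are kept, and at the largest value none survive, which is the underfitting extreme. On real data the features are rarely uncorrelated, so alpha is usually chosen by cross-validation, for example with scikit-learn's `LassoCV`.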

Conclusion:

Lasso Regression helps us build more robust and interpretable models by trading a little accuracy on the training data for simplicity. Its L1 penalty performs feature selection and regularization in a single step, which makes it a practical choice for high-dimensional data. With a well-tuned alpha, it can deliver better predictions on new data and a clearer view of which variables drive them.